Dance with Somebody

Haptic sensation in an online dance experience

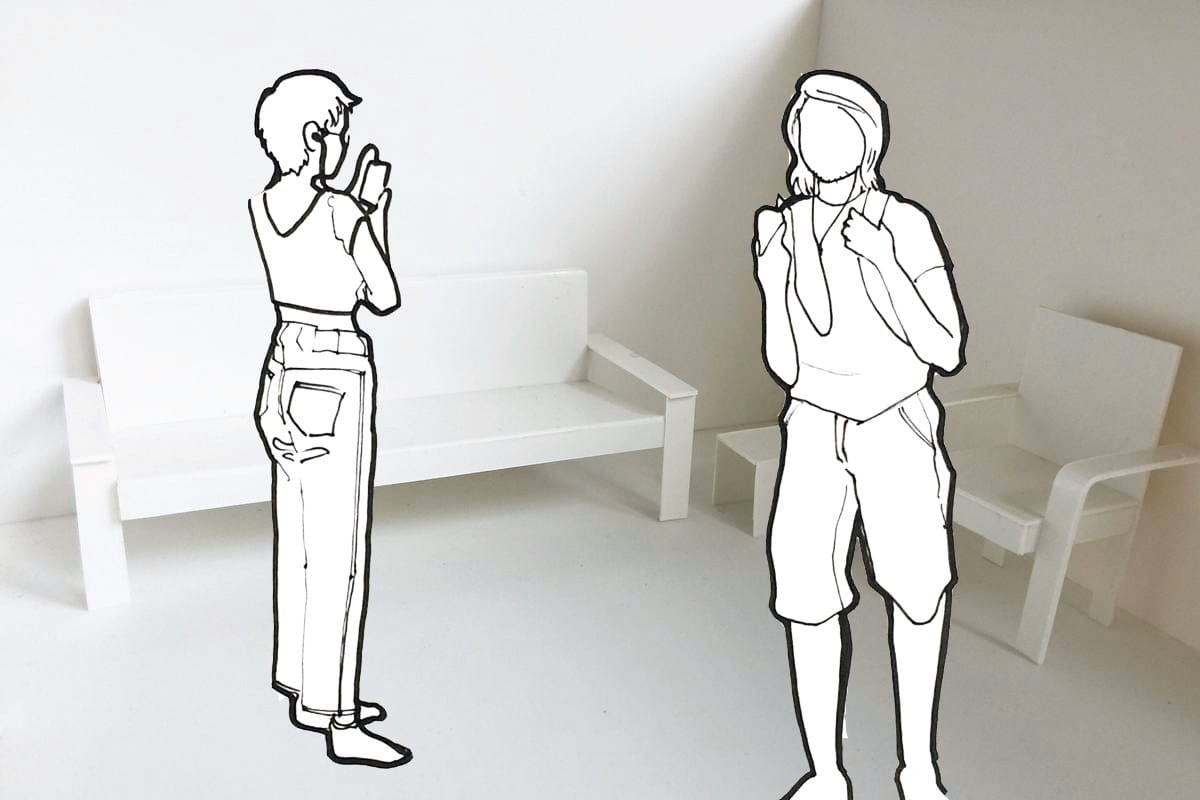

›Letting GO!‹ is a dance workshop that took place online during the COVID-19 pandemic. In times of social distancing, we yearn for human connection. The project explores how an online dance experience can move beyond the limited, everyday use of audio-visual media by incorporating haptic sensations. Although the screen is a window for communication, it also screens out the delicate nuances we would perceive with our senses when dancing together offline. How can an online experience between dancers become more immersive?

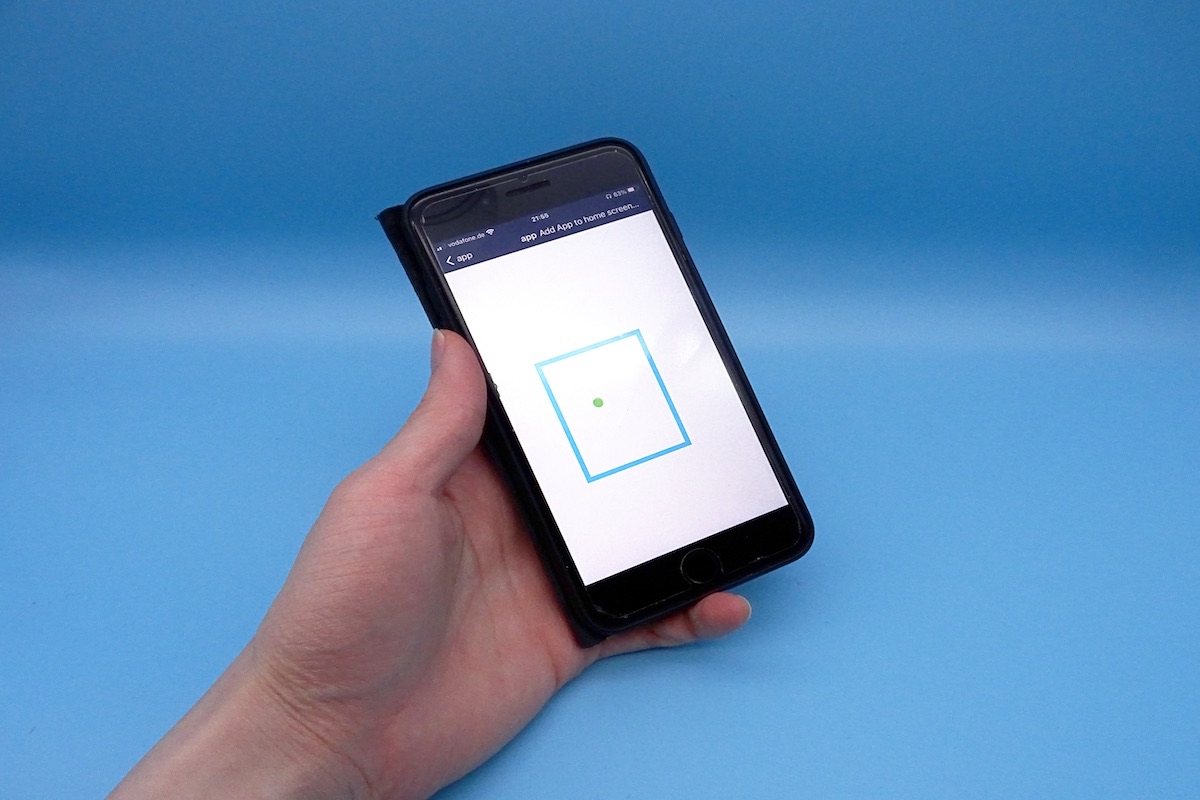

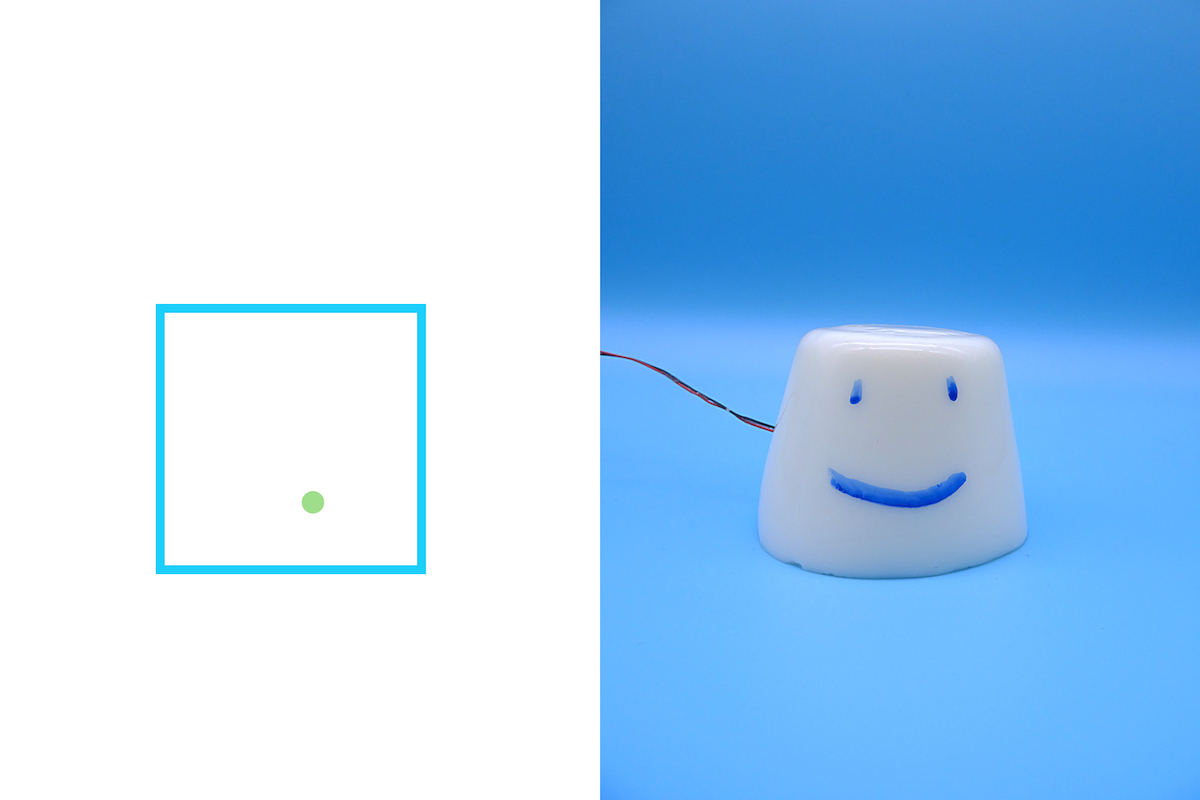

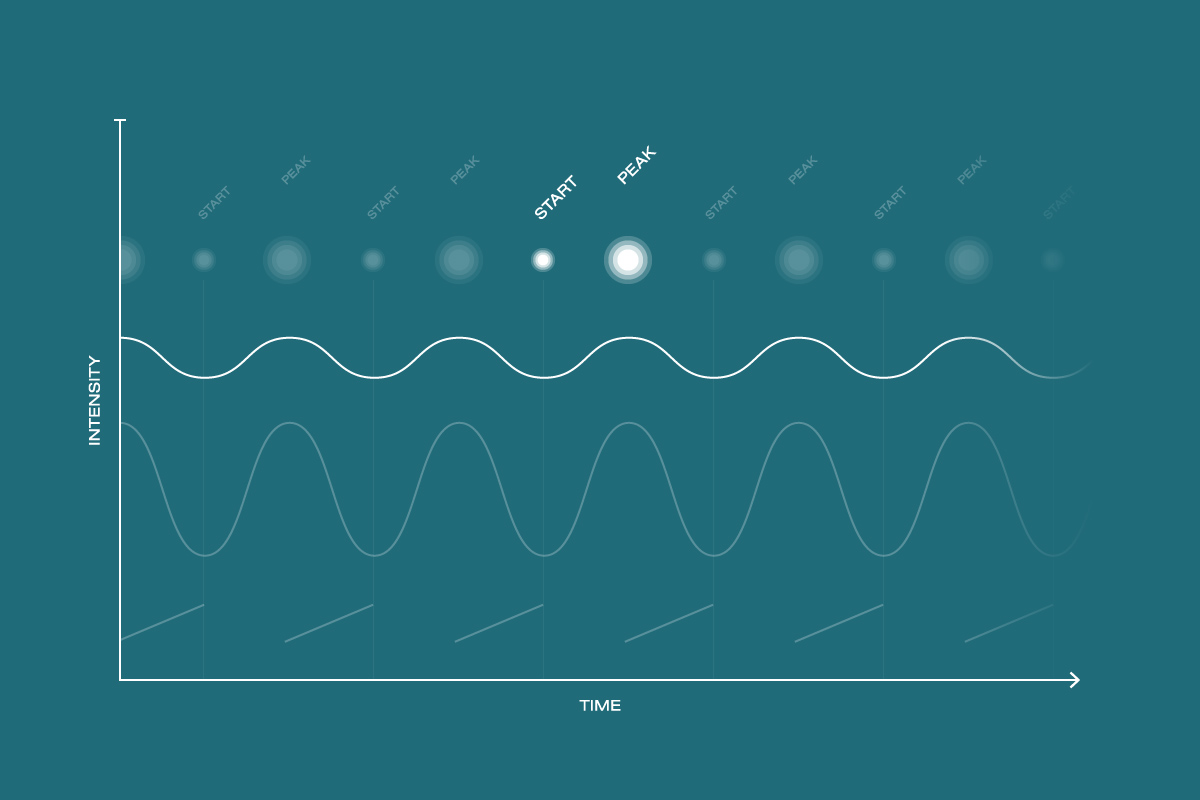

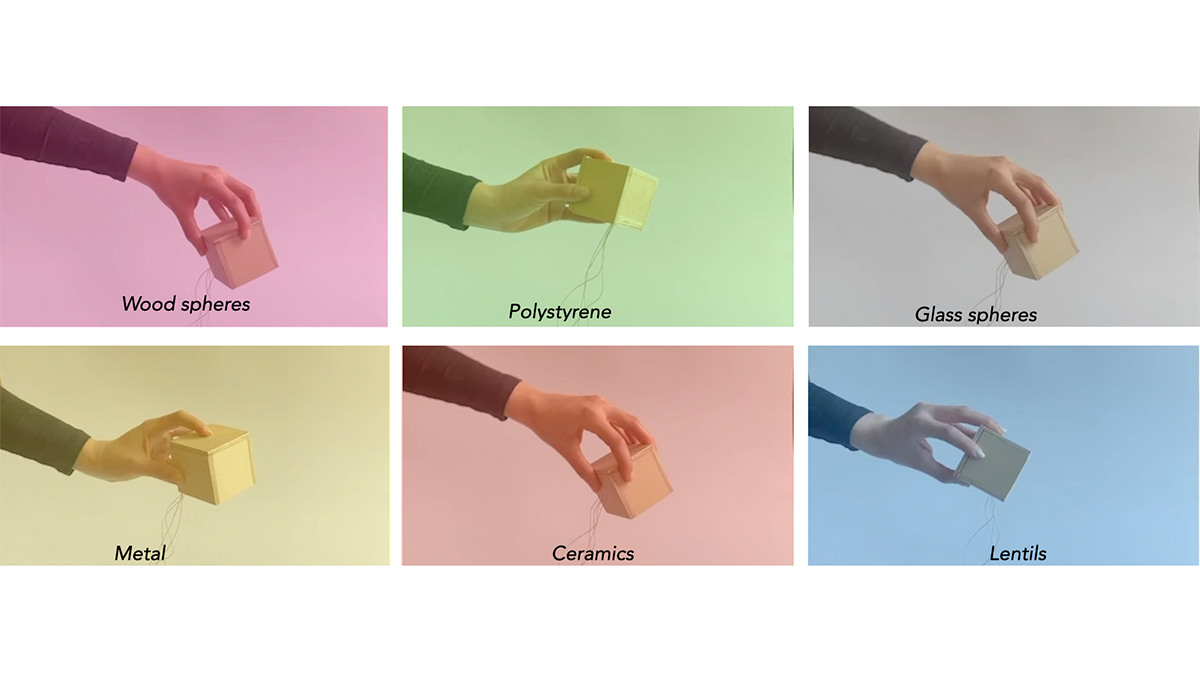

The first goal was to transmit the tactile sensation of a dance move from one dancer to the other. To do so, the ›moves‹ first had to be defined and filtered out of all the captured motion data. Image targets were used to track the dancers' hand movements. Then, by calculating the acceleration, only the final position of each dance move was extracted.
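The move extraction can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's actual code: it assumes the tracker delivers one 2D hand position per frame at a fixed interval `dt`, treats "acceleration" as the frame-to-frame change in hand speed, and marks the end of a move when the hand is decelerating, has nearly stopped, and was moving fast enough beforehand. The function name `extract_move_endpoints` and both thresholds are hypothetical and would need tuning to real tracking data.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def extract_move_endpoints(
    positions: List[Point],
    dt: float,
    stop_speed: float = 0.05,      # hypothetical: below this speed the hand counts as "at rest"
    min_peak_speed: float = 0.3,   # hypothetical: a real move must have been at least this fast
) -> List[Point]:
    """Return the position where each detected move comes to rest (illustrative sketch)."""
    endpoints: List[Point] = []
    peak = 0.0                     # fastest speed seen since the last detected endpoint
    prev_speed = None
    for i in range(1, len(positions)):
        # Speed between consecutive frames (units of position per second).
        speed = math.dist(positions[i], positions[i - 1]) / dt
        if prev_speed is not None:
            # Scalar acceleration: change in speed per second.
            accel = (speed - prev_speed) / dt
            # Decelerating, almost stopped, after a sufficiently fast motion:
            # treat this frame as the final position of a move.
            if accel < 0 and speed < stop_speed and peak >= min_peak_speed:
                endpoints.append(positions[i])
                peak = 0.0
        peak = max(peak, speed)
        prev_speed = speed
    return endpoints


# Usage: the hand rests, sweeps to x = 1.0 and stops there.
track = [(0, 0), (0, 0), (0.2, 0), (0.6, 0), (0.9, 0), (1.0, 0), (1.0, 0), (1.0, 0)]
print(extract_move_endpoints(track, dt=0.1))
```

Requiring a minimum peak speed keeps small tracking jitter from registering as a move, so only the position where a deliberate gesture settles would be passed on to the haptic output.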


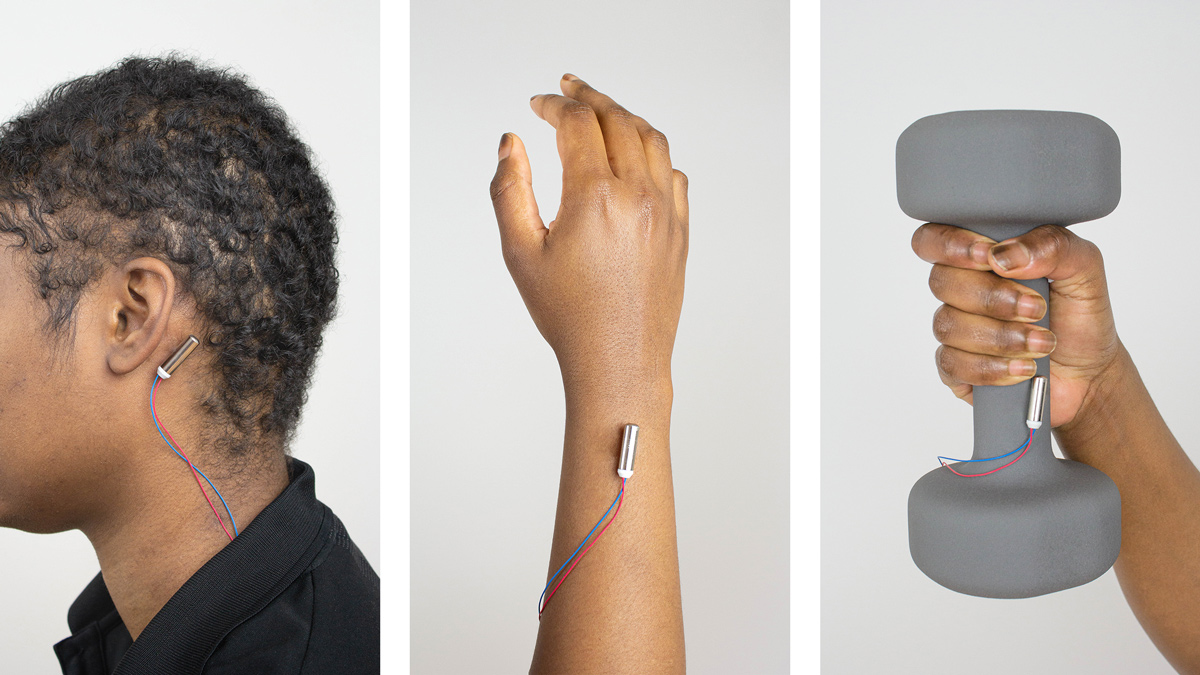

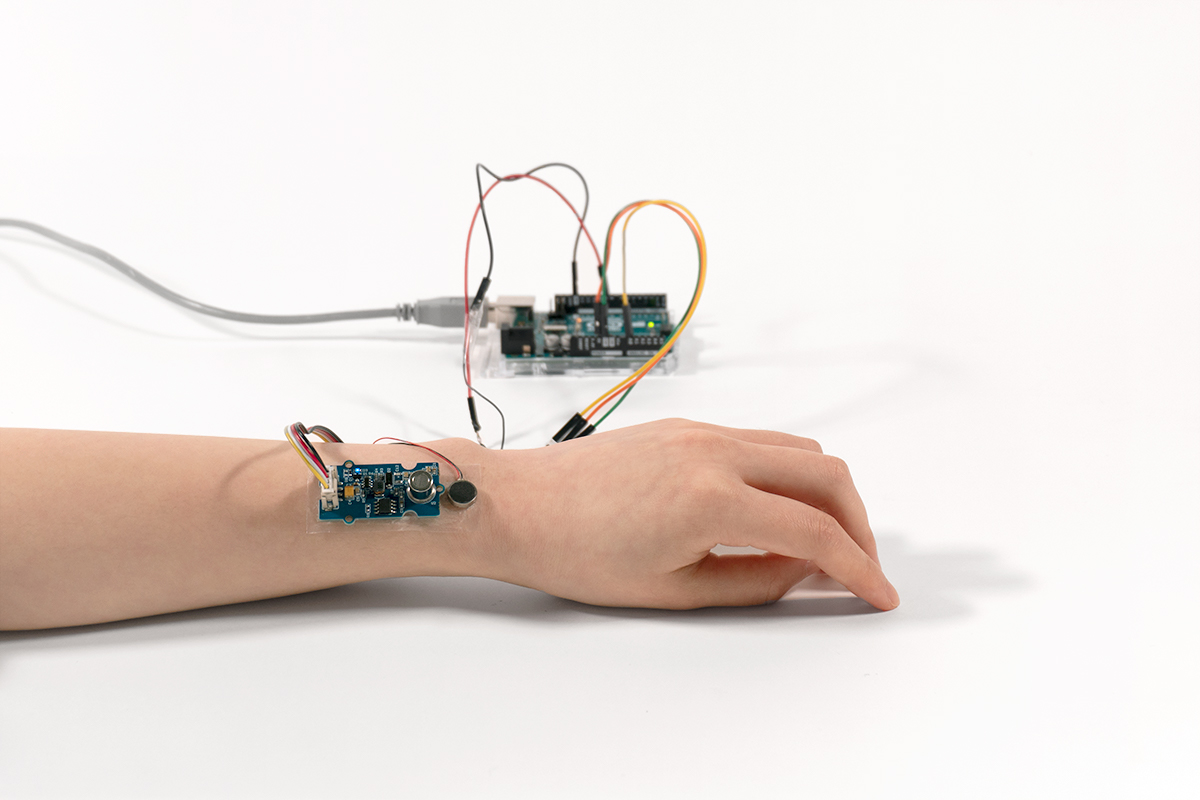

As for the output, the hand proved to be the most suitable place to receive a ›touch‹ because of its high sensitivity. Vibration motors concealed in a slim band create a sensation of motion on the skin, as if someone were brushing the back of your hand.
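One possible way to drive such a band is to pulse the motors one after another with overlapping on-times, so that the individual buzzes blend into a single stroke travelling across the skin (an effect often called tactile apparent motion). The sketch below only computes that timing schedule; the names `brush_sweep` and `active_motors`, the number of motors, the pulse length `pulse_ms` and the onset gap `onset_gap_ms` are hypothetical placeholders rather than values from the project, and switching the physical motors is left to whatever microcontroller code drives the band.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pulse:
    motor: int      # index of the motor along the band
    start_ms: int   # time at which the motor switches on
    end_ms: int     # time at which it switches off (exclusive)


def brush_sweep(num_motors: int, pulse_ms: int = 120, onset_gap_ms: int = 60,
                reverse: bool = False) -> List[Pulse]:
    """Staggered pulses that travel along a row of motors (hypothetical timing values).

    Because onset_gap_ms < pulse_ms, neighbouring pulses overlap in time,
    which is intended to make them feel like one continuous stroke.
    """
    order = range(num_motors - 1, -1, -1) if reverse else range(num_motors)
    pulses = []
    for step, motor in enumerate(order):
        start = step * onset_gap_ms
        pulses.append(Pulse(motor, start, start + pulse_ms))
    return pulses


def active_motors(pulses: List[Pulse], t_ms: int) -> List[int]:
    """Indices of the motors that should be vibrating at time t_ms."""
    return sorted(p.motor for p in pulses if p.start_ms <= t_ms < p.end_ms)


sweep = brush_sweep(4)
print(active_motors(sweep, 90))
```

For example, `brush_sweep(4)` starts motor 0 at 0 ms and each following motor 60 ms later, with every pulse lasting 120 ms, so at 90 ms motors 0 and 1 are active at the same time. Setting `reverse=True` sweeps the stroke in the opposite direction.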

It seems more relevant than ever to explore new means that can help us feel connected to one another, especially now that communication in the broadest sense is migrating almost entirely to digital spaces while the physicality of our human bodies is not.
